Why Edge Computing Is Making Embedded Systems Better in 2025

Edge computing is reshaping embedded systems, and the growth forecasts reflect it. The embedded systems market is projected to reach $116.2 billion by 2027, a 6.1% CAGR. Global spending on edge computing was forecast to hit $200 billion in 2024, a 15.4% increase over the previous year.

What is edge computing exactly? It is an approach that processes data at the network’s “edge”, right where the data originates, instead of sending it to distant cloud servers. This enables faster response times and quicker decision-making, both of which are vital for autonomous vehicles, smart cities, and industrial automation. Edge processing is expected to handle over 75% of IoT-generated data by 2027, which will reduce cloud dependency and speed up response times.

This piece looks at how edge computing is changing embedded systems in 2025. It focuses on the technologies driving the transformation, such as mobile edge computing with 5G connectivity, and on real-world examples across several industries. The outlook is promising: more than 3 billion IoT devices are expected to connect through 5G by 2028, which should accelerate progress in autonomous systems and industrial automation.

How edge computing is changing embedded systems

Edge computing and embedded systems are joining forces to create a fundamental change in device data processing. These systems now process data closer to its source, which changes how embedded systems work in three important ways.

Faster processing at the source

Smart systems now bring intelligence right to where data is created. They no longer just collect and forward raw data; they process it on the spot. This matters most for critical systems such as self-driving cars, factory automation, and smart cities, where speed is essential.

Today’s embedded systems at the edge can work on their own. They keep essential services running even when cloud connections fail. The systems analyse sensor data, make choices, and take action by themselves, right where the data starts. By 2027, over 75% of IoT-generated data is expected to be processed at the edge, which marks a major change in how systems are built.
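The autonomy described above can be sketched as a small store-and-forward loop. This is a minimal illustration, not a production design: the `EdgeNode` class, the `limit` threshold, and the `send` callback are all hypothetical names introduced for this example.

```python
from collections import deque


class EdgeNode:
    """Hypothetical edge node: decides locally, buffers reports while offline."""

    def __init__(self, limit, max_buffer=100):
        self.limit = limit
        # Bounded buffer: when full, the oldest report is dropped first.
        self.pending = deque(maxlen=max_buffer)

    def handle(self, reading, cloud_online, send):
        # The decision is made locally and never waits on the network.
        action = "shutdown" if reading > self.limit else "run"
        self.pending.append({"reading": reading, "action": action})
        if cloud_online:
            # Flush everything buffered during the outage, oldest first.
            while self.pending:
                send(self.pending.popleft())
        return action
```

The design choice worth noting is that the cloud only ever receives a report of what was decided; the decision itself depends on nothing but the local reading.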

Reduced latency in real-time applications

Edge computing’s biggest effect on embedded systems is the sharp drop in delay times. Local data processing lets edge devices respond much faster, a vital factor for time-sensitive tasks. Research has shown that processing at the edge cuts response times by 80-95% compared to cloud processing.

This quick processing power changes how split-second decisions are made:

- Medical devices can watch and study patient data live to help doctors act fast

- Self-driving cars can process sensor data and make instant choices for safer driving

- Factory equipment can spot problems and shut down before damage happens

In each case, the system responds quickly because it handles data where it is created.

Lower bandwidth and cloud dependency

Edge computing changes how embedded systems talk to the cloud. Local analysis lets systems filter and process data before sending it out, which cuts down bandwidth needs. This selective approach to sending data can reduce network traffic by 30-90%, depending on what the system does and what kind of data it handles.
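One simple way to filter locally is a deadband (report-by-exception) filter, which transmits a sample only when it differs enough from the last value sent. The sketch below is one possible technique, not the only one; the readings and the 0.5 threshold are made-up values chosen for illustration.

```python
def deadband_filter(samples, threshold):
    """Yield only samples that differ from the last sent value by more than threshold."""
    last_sent = None
    for value in samples:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


# Illustrative temperature readings (assumed data, not measurements).
readings = [20.0, 20.1, 20.2, 20.1, 21.5, 21.6, 21.4, 25.0, 25.1, 25.0]
sent = list(deadband_filter(readings, threshold=0.5))

# Fraction of samples that never left the device.
reduction = 1 - len(sent) / len(readings)
```

With this sample data, only three of the ten readings are transmitted. Real savings depend entirely on how noisy the signal is and how large a threshold the application can tolerate.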

Edge computing also helps embedded systems work well even with spotty connections. This approach cuts bandwidth costs and keeps data private since sensitive information stays on the device. Smart security cameras with edge AI are a good example – they analyse video locally and only send important clips or alerts to the cloud instead of streaming everything.

Key technologies driving the shift

The technology that powers edge computing in embedded systems continues to grow rapidly. Several breakthrough technologies are reshaping this scene in 2025.

AI at the edge: TinyML and smart microcontrollers

TinyML brings together machine learning and embedded systems. This technology adds intelligence directly to ultra-low power devices that have limited resources. Neural networks can now run on microcontrollers with just a few kilobytes of RAM. The global edge AI market will likely reach AUD 96.22 billion by 2030. Experts predict an impressive growth rate of 20.1% CAGR.
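Fitting a network into a few kilobytes usually relies on quantisation, among other techniques. The sketch below shows symmetric per-tensor int8 quantisation in plain Python, assuming a toy weight list and a hypothetical 20,000-parameter model; real toolchains apply more elaborate schemes.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantisation: floats become int8 values plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.02, 1.0]  # toy values, chosen for illustration
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Storage for a hypothetical 20,000-parameter model.
n_params = 20_000
float32_bytes = n_params * 4  # 4 bytes per float32 weight
int8_bytes = n_params * 1     # 1 byte per int8 weight
```

Each restored weight lands within half a quantisation step of the original, while the weight storage shrinks to a quarter of its float32 size.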

Modern microcontrollers now come with AI accelerators like neural processing units (NPUs). These units make on-device machine learning possible:

- Recent STM32 microcontrollers include AI accelerators built specifically to run machine learning algorithms on the device

- STMicroelectronics’ STM32Cube.AI turns trained neural networks into optimised code for microcontrollers

- STM32N6’s Neural-ART Accelerator delivers 600 GOPS at 3 TOPS/W energy efficiency
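To put the last bullet's two figures in context, dividing throughput by efficiency gives an implied power draw. This is back-of-the-envelope arithmetic that assumes both figures apply at the same operating point, which a vendor headline number may not guarantee.

```python
def implied_power_watts(throughput_gops, efficiency_tops_per_watt):
    """Power implied by a throughput figure and an efficiency figure."""
    throughput_tops = throughput_gops / 1000  # 1 TOPS = 1000 GOPS
    return throughput_tops / efficiency_tops_per_watt


# 600 GOPS at 3 TOPS/W works out to roughly 0.2 W.
npu_power_w = implied_power_watts(600, 3)
```

A budget of around a fifth of a watt at full throughput is what makes this class of accelerator plausible for small, passively cooled devices.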

5G and mobile edge computing

5G technology plays a key role in advancing edge computing by providing low-latency connectivity. 5G targets latency as low as 1 millisecond under ideal conditions, compared with 20-30 ms on 4G. This speed allows AI-powered devices and edge systems to exchange data almost instantly.

5G and edge computing create a powerful combination. Mobile edge computing (MEC) moves processing closer to cellular base stations. This setup helps applications that need instant responses. The system processes terabytes of data within milliseconds. Such speed is crucial for autonomous vehicles, industrial automation, and smart cities.

Ultra-low-power processors for longer device life

Battery-powered embedded edge devices need excellent energy efficiency. Neuromorphic computing offers a groundbreaking solution. This approach copies brain functions through compute-in-memory architectures and event-driven processing. The result is much lower power consumption.

BrainChip’s Akida Pico represents recent innovation in this field. It runs on less than 1 milliwatt, making it perfect for battery-powered devices. Manufacturers also add advanced power management features like deep sleep modes and adaptive voltage control. These features create “deploy-and-forget” devices that run for many years on a single battery.
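A rough battery-life estimate shows why sleep modes matter as much as active power. Every number in the sketch below is an assumption for illustration (a 225 mAh, 3 V coin cell, 1 mW active power, 5 µW sleep power), and the model ignores self-discharge and regulator losses.

```python
def battery_life_years(capacity_mah, voltage, active_mw, sleep_uw, duty_cycle):
    """Estimate runtime from average power draw.

    duty_cycle is the fraction of time spent active (0.0 to 1.0).
    """
    energy_mwh = capacity_mah * voltage
    sleep_mw = sleep_uw / 1000
    avg_mw = active_mw * duty_cycle + sleep_mw * (1 - duty_cycle)
    hours = energy_mwh / avg_mw
    return hours / (24 * 365)


# Always active at 1 mW versus active 1% of the time.
always_on = battery_life_years(225, 3.0, active_mw=1.0, sleep_uw=5.0, duty_cycle=1.0)
duty_cycled = battery_life_years(225, 3.0, active_mw=1.0, sleep_uw=5.0, duty_cycle=0.01)
```

Under these assumptions the always-on device lasts about a month, while the duty-cycled one lasts roughly five years, which is the gap that deep sleep modes are meant to close.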

These advances create an ecosystem where intelligence moves from central cloud systems to scattered edge devices. This shift changes how embedded systems work fundamentally.

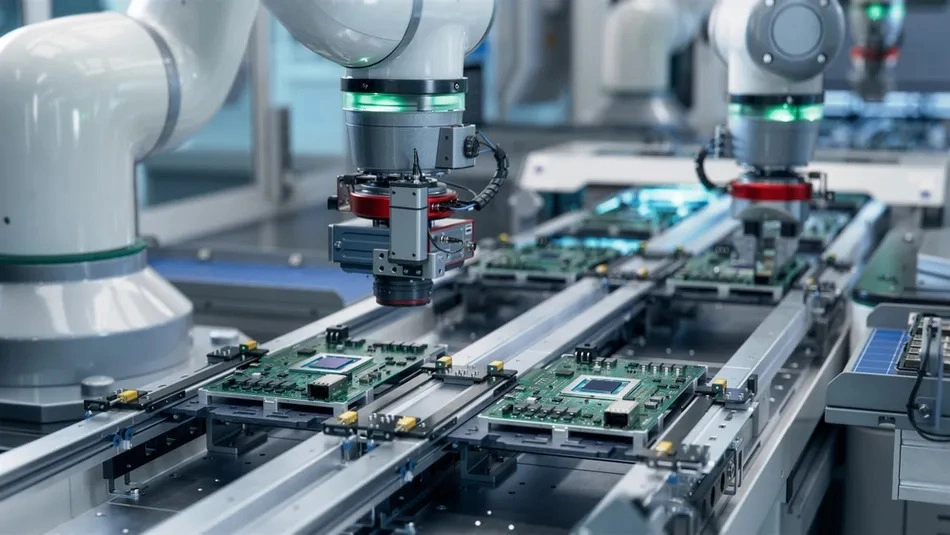

Real-world examples of edge computing in embedded systems

Real-world deployments of edge computing in embedded systems show practical benefits in many industries. These applications demonstrate how local data processing gives clear advantages in critical scenarios.

Autonomous vehicles and live decision-making

Edge computing helps autonomous vehicles make split-second decisions by processing sensor data on-site. A vehicle can recognise danger and brake within milliseconds when a pedestrian steps into the road. This happens without waiting for cloud processing. Tesla’s Autopilot processes camera and sensor data inside the vehicle and ensures safe operation even with poor internet connectivity.
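A quick calculation shows why those milliseconds matter: the distance a vehicle covers before the system even begins to react. The 50 km/h speed and the 100 ms and 10 ms latencies are illustrative assumptions, not measurements from any particular vehicle.

```python
def distance_during_latency_m(speed_kmh, latency_ms):
    """Distance travelled (metres) while waiting for a decision."""
    speed_ms = speed_kmh / 3.6       # km/h to m/s
    return speed_ms * (latency_ms / 1000)


cloud_case = distance_during_latency_m(50, 100)  # assumed cloud round trip
local_case = distance_during_latency_m(50, 10)   # assumed on-board processing
```

At 50 km/h, a 100 ms delay corresponds to about 1.4 m of travel, against about 0.14 m for a 10 ms delay.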

Smart healthcare devices with local diagnostics

Edge-enabled monitoring systems in healthcare analyse patient data on the spot and trigger alerts when readings cross critical thresholds. Wearable devices track vital signs continuously. Edge computing spots anomalies like irregular heart rhythms right away. Medtronic employs edge computing in insulin pumps to adjust doses live, which improves diabetes management.
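Threshold alerting of this kind can be sketched in a few lines. The version below requires several consecutive out-of-range readings before alerting, a common way to avoid reacting to a single noisy sample. The function name and the limits are hypothetical and purely illustrative, not clinical guidance.

```python
def first_alert_index(heart_rates, low=40, high=130, consecutive=3):
    """Return the index at which an alert fires, or None if it never does.

    An alert fires once `consecutive` readings in a row fall outside [low, high].
    """
    streak = 0
    for i, bpm in enumerate(heart_rates):
        if bpm < low or bpm > high:
            streak += 1
        else:
            streak = 0  # an in-range reading resets the count
        if streak >= consecutive:
            return i
    return None


noisy = [72, 135, 74, 71]                     # one spike, no alert
sustained = [72, 135, 74, 140, 142, 150, 90]  # three in a row, alert
```

Because the check runs on the device, the alert does not depend on a network connection being available at the moment the readings go out of range.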

Industrial automation with predictive maintenance

Edge AI helps predict equipment failures before they happen. Instead of relying on reactive or scheduled maintenance, edge systems use sensor data to spot impending failures from patterns and anomalies. The same approach applies beyond the factory floor: in maritime applications, edge AI lets autonomous surface vehicles detect threats without cloud connectivity.
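One simple form of anomaly detection that fits on a small device is a rolling z-score over recent sensor readings. It stands in here for the more sophisticated models a real deployment might use; the window size, the limit of 3 standard deviations, and the vibration values are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(samples, window=5, z_limit=3.0):
    """Return indices of samples far from the rolling mean of recent readings."""
    history = deque(maxlen=window)
    flagged = []
    for i, x in enumerate(samples):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(x - mu) / sigma > z_limit:
                flagged.append(i)
                continue  # keep outliers out of the baseline
        history.append(x)
    return flagged


# Illustrative vibration amplitudes with one spike at index 6.
vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 5.0, 1.0, 0.9]
anomalies = detect_anomalies(vibration)
```

Only the last few readings are kept in memory, so the cost stays constant however long the device runs.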

Smart cities and traffic management

Smart traffic systems use edge computing to analyse intersection data and adjust signals dynamically. This optimises vehicle flow. Barcelona’s city-wide sensors send traffic data to local edge servers. Quick signal adjustments have cut congestion during peak hours.
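Dynamic signal adjustment can be illustrated with a proportional split of green time. This is a deliberately simplified sketch and not how any specific city's system works: the function, the 90-second cycle, and the 10-second minimum are hypothetical, and it ignores amber and all-red intervals.

```python
def allocate_green_seconds(queue_lengths, cycle_s=90, min_green_s=10):
    """Split a fixed signal cycle across approaches in proportion to queue length.

    queue_lengths maps an approach name to the number of waiting vehicles.
    """
    n = len(queue_lengths)
    spare = cycle_s - n * min_green_s
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(queue_lengths.values())
    plan = {}
    for approach, queue in queue_lengths.items():
        # With no traffic at all, share the spare time equally.
        share = spare * queue / total if total else spare / n
        plan[approach] = min_green_s + share
    return plan


plan = allocate_green_seconds({"north_south": 30, "east_west": 10})
```

With three times as many vehicles waiting, the north-south approach receives 62.5 seconds of green against 27.5 seconds for east-west, and the two still sum to the full cycle.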

What to expect in 2025 and beyond

Embedded systems keep evolving as edge computing matures. Several important developments will shape the technology through 2025 and beyond.

More hybrid edge-cloud architectures

Edge and cloud integration defines the path forward. Hybrid edge-cloud architectures let critical decisions happen in real time at the edge, while the cloud handles strategic data analysis. This balanced approach gives organisations flexibility, scalability, and cost benefits. In hybrid models, edge devices can also act as servers where possible, which creates computing environments that grow naturally as new devices join. The architecture helps cut cloud hosting costs, bandwidth usage, and energy consumption.
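The split between edge and cloud can be expressed as a placement rule driven by each task's deadline. The policy below is one possible sketch under stated assumptions (known compute times and a known network round trip); the function name and all timings are hypothetical.

```python
def place_task(deadline_ms, edge_ms, cloud_ms, round_trip_ms):
    """Choose where a task should run, given its deadline.

    Prefers the cloud when the deadline allows it, which keeps the
    resource-constrained edge device free for time-critical work.
    """
    cloud_total_ms = cloud_ms + round_trip_ms
    if cloud_total_ms <= deadline_ms:
        return "cloud"
    if edge_ms <= deadline_ms:
        return "edge"
    return "infeasible"


# A 20 ms control decision versus a nightly trend analysis.
control = place_task(deadline_ms=20, edge_ms=5, cloud_ms=2, round_trip_ms=80)
analytics = place_task(deadline_ms=60_000, edge_ms=30_000, cloud_ms=500, round_trip_ms=80)
```

Here the control decision stays on the device because the network round trip alone exceeds its deadline, while the analysis job goes to the cloud.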

Wider adoption of post-quantum security

Traditional cryptography faces risks as quantum computing advances, so post-quantum cryptography (PQC) has become crucial for embedded systems. NIST has standardised algorithms derived from CRYSTALS-Kyber and CRYSTALS-Dilithium, published as ML-KEM (FIPS 203) and ML-DSA (FIPS 204). These quantum-resistant methods protect against “harvest now, decrypt later” threats. Intel and NXP will add PQC support to their roadmaps by 2025. They’ll offer specialised libraries optimised for devices with limited resources.

Standardisation of edge computing frameworks

The industry’s standardisation efforts have picked up speed. The recently established CEN-CLC/JTC 25 “Data management, Dataspaces, Cloud and Edge” committee tackles edge computing standardisation. Its projects focus on placing compute resources at the best processing points in the computing continuum. The resulting standards should help different types of systems work together smoothly.

Growth in edge AI model deployment

The Edge AI chips market shows strong potential. It should reach AUD 10.83 billion by 2025 and grow to AUD 87.15 billion by 2030. Multimodal and shared models, better hardware accelerators, and hybrid designs will shape IoT and EV systems. Developers will split large modular models between edge and cloud layers more often.

Conclusion

Edge computing has reshaped embedded systems over the last several years. The fundamental change from cloud-dependent architectures to intelligent edge devices delivers major benefits across industries: faster processing, lower latency, and more efficient use of bandwidth.

Cloud computing maintains its crucial role, yet edge-enabled embedded systems are expected to process over 75% of IoT-generated data at the source by 2027. This advancement enables autonomous vehicles to make split-second decisions. Healthcare devices can detect anomalies without delay, and industrial systems predict failures before they happen.

The technical foundations behind this evolution keep advancing. TinyML now brings AI capabilities to microcontrollers that have minimal resources. 5G connectivity delivers the ultra-fast data exchange needed for real-time applications. Ultra-low-power processors also extend device lifespans, which makes “deploy-and-forget” installations practical for the first time.

Hybrid edge-cloud architectures will become standard practice, with edge and cloud treated as complementary rather than competing approaches. Post-quantum security measures will protect sensitive data as quantum computing progresses. Standardisation efforts will make integration simpler between different systems.

Edge computing’s expansion will persist as AI deployment gains momentum and more devices gain edge processing capabilities. Developers and businesses must understand these trends to prepare for a future where intelligence lives at the network edge. This shift will forever alter embedded systems’ design and implementation.

FAQs

Q1. Is edge computing still relevant in 2025? Yes, edge computing remains highly relevant in 2025. It’s particularly crucial for applications requiring real-time processing, low latency, and data privacy. Industries like autonomous vehicles, healthcare, and industrial automation are increasingly adopting edge computing to enhance performance and reduce dependency on cloud services.

Q2. What are the main benefits of edge computing for embedded systems? Edge computing offers several advantages for embedded systems, including faster processing at the data source, reduced latency for real-time applications, and lower bandwidth requirements. It also enables devices to function effectively with intermittent connectivity and enhances data privacy by processing sensitive information locally.

Q3. How is AI being integrated into edge computing devices? AI is being integrated into edge devices through technologies like TinyML and smart microcontrollers. These advancements allow neural networks to run on devices with limited resources, enabling on-device machine learning. AI accelerators and neural processing units are now being incorporated into microcontrollers, making edge AI more accessible and efficient.

Q4. What role does 5G play in the advancement of edge computing? 5G technology is a critical enabler for edge computing, offering ultra-low latency and high-speed connectivity. It facilitates real-time data exchange between AI-powered devices and edge computing systems. The combination of 5G and edge computing creates powerful synergies, particularly in applications like autonomous vehicles and smart cities that require instantaneous responses.

Q5. What future developments can we expect in edge computing? In the coming years, we can anticipate more hybrid edge-cloud architectures, wider adoption of post-quantum security measures, and increased standardisation of edge computing frameworks. There will also be significant growth in edge AI model deployment, with the market for edge AI chips projected to reach billions by 2030. These developments will further enhance the capabilities and applications of edge computing in embedded systems.